AI tools are entering the workplace faster than most IT teams can respond. What began as experimental use cases is now widespread day-to-day reliance, often without oversight.

We surveyed IT and security professionals to understand how their teams are approaching AI: what tools they're using, how policies are evolving, and where the biggest gaps and pressures lie.

The bottom line: While 76% of organizations allow all employees to use AI tools, only 39% have well-defined policies with clear use cases. The result? A perfect storm of shadow IT, data leakage concerns, and compliance headaches that IT teams are scrambling to address.

This report surfaces one clear theme: AI is not just another trend to manage - it's a shift in how work gets done. And IT's role isn't about restriction. It's about redefining enablement, visibility, and safety at scale.

Who We Heard From

Our survey captured perspectives from IT and security professionals across organizations of varying sizes. The largest group of respondents (44%) identified as IT Admins, followed by IT Managers and Directors (38%), and Security/Compliance professionals (12%).

Most represented small to mid-sized organizations: 42% work at companies with 1-100 employees, while 38% are at organizations with 101-500 employees. The remaining respondents came from larger enterprises, with 10% at companies with 501-1,000 employees, 7% at organizations with 1,001-5,000 employees, and 3% at companies with 5,000+ employees.

AI governance is a challenge for companies of all sizes, but it's especially urgent for smaller IT teams, which have to juggle innovation and security without the resources of large enterprise IT and security teams.

The Current State: Wide Adoption, Loose Control

The survey paints a picture of AI tools becoming as common as email in modern workplaces. In all, 77% of organizations allow all employees to use AI tools, with only 1% blocking them entirely. This represents a dramatic shift in enterprise software adoption: such rapid, organization-wide deployment of a new technology category is rare.

Despite concerns about data leakage, compliance, and tool sprawl, AI is widely in use:

- More than 80% of respondents said they personally use AI tools at least occasionally.

- Top tools include: ChatGPT, Google Gemini, Microsoft Copilot, Claude, Notion AI, and GitHub Copilot.

- Many mentioned multiple tools in daily workflows: writing, scripting, troubleshooting, note-taking, and planning.

As one respondent described their usage: "I use AI in everything I do. From summarising documents to drafting policies to writing code."

Another noted using AI for "brainstorming, talking through ideas, primary drafting from notes, improving sheets formulas, scripting, and more."

But this reliance brings new questions: How do we verify outputs? What training is required? When is automation helpful and when is it risky?

And policy hasn't kept up:

- 38% of organizations have a formal AI usage policy that's clearly defined, but 32% admit their policies are "loosely enforced."

- Around 17% are developing an AI policy, but 13% admit they have no policy in place at all.

- Nearly 50% say tools are accessed through personal accounts or a mix of sanctioned/unsanctioned methods.

As one respondent put it: "Our CEO gave everyone a budget to buy whatever tools they want. No oversight." This gap between adoption and governance creates the perfect conditions for shadow IT to flourish.

The Challenges: Shadow AI, Visibility, and Control

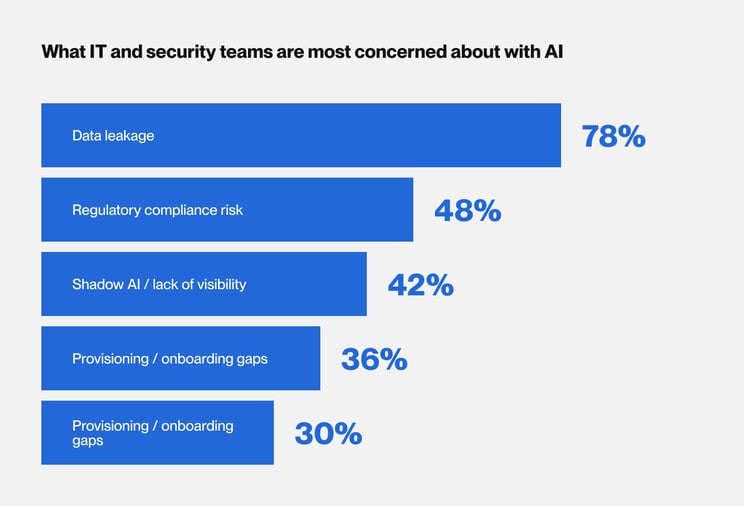

When asked about their primary concerns, 78% cited data leakage as their top worry - significantly outpacing other security considerations. This is followed by compliance or regulatory risk (48%) and shadow AI/lack of visibility (42%).

These concerns are well-founded. Multiple respondents highlighted specific risks around proprietary information being absorbed by large language models, with one noting: "Company IP is being absorbed by the LLMs." Another emphasized the challenge of "preventing staff from putting client data into tools without mature privacy measures/guarantees."

From open-text answers, some consistent themes emerged:

- Shadow AI is real and growing. Teams described browser extensions, rogue SaaS signups, and note-taking tools that show up unannounced in meetings.

- Lack of visibility is a top concern. Admins can't protect what they can't see, and many tools bypass MDM, SSO, or traditional monitoring setups.

- Policy and process gaps leave IT reacting instead of guiding. "We don't have a centralized management platform for AI. We're working on it," said one respondent.

Some teams reported investing in enterprise tools with SSO or auditing features, but others said price, complexity, or unclear ROI were blockers. As one respondent noted: "Price plans with SSO are expensive. We want to use many different tools to use the best for the use case and also personal preference."

The Training and Awareness Gap

Nearly 30% of respondents identified lack of user training or awareness as a significant concern. This aligns with the qualitative feedback, where "training" emerged as the most frequently mentioned solution. As one respondent advised: "Training is the most critical thing you can do."

The challenge isn't just technical. Organizations are struggling to help employees understand both the benefits and risks of AI tools. Several respondents mentioned the need for comprehensive education programs that go beyond simple do's and don'ts.

What IT Teams Are Doing About It

Current Safeguards: Policy-Heavy, Tech-Light

The most common safeguard is internal policy or usage guidelines (73%), followed by security training for AI use (52%). However, technical controls lag behind: only 36% have implemented OAuth or SSO-based monitoring, and just 33% are blocking tools via MDM or firewall.

This policy-first approach may reflect the speed at which AI tools have proliferated - it's easier to write a policy than to implement comprehensive technical controls across diverse AI platforms.

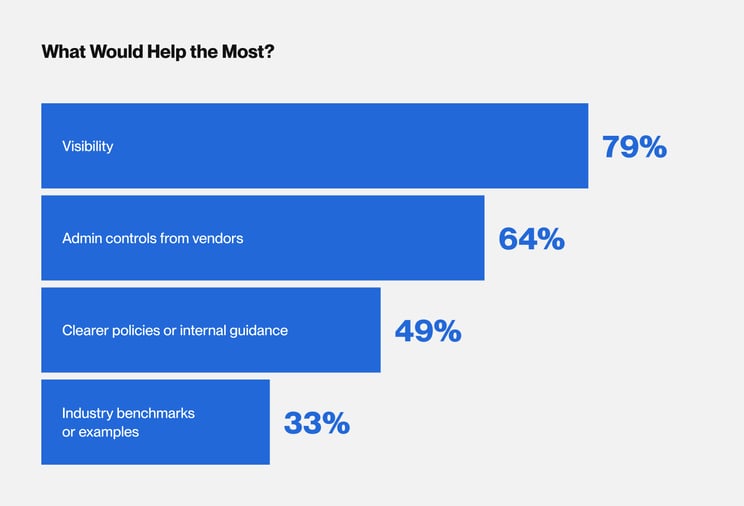

What Would Help: Visibility Above All

When asked what would help them manage AI more effectively, 79% of respondents wanted better visibility into usage. This was followed by stronger admin controls from AI vendors (64%) and clearer policies or internal guidance (49%).

The visibility challenge is particularly acute given the browser-based nature of most AI tools. Unlike traditional SaaS applications that can be easily monitored and controlled, AI tools often operate through standard web interfaces, making them harder to track and govern.

Industry Perspectives: What IT Leaders Are Saying

Survey responses ranged widely, from playing it safe to fully embracing AI:

The Embracers: "Embrace it. As an org you will be left behind if you do not. But provide the training, policies, tools, etc. Make sure people understand that the org is still in charge."

The Realists: "People are going to use AI whether you want them to or not, so find out how you can manage it safely to minimize harm/risk."

The Overwhelmed: "Good luck. Web access alone is all users need. They will find it."

The Strategic: "You need strong policies and warnings from the executive level (and reinforced by senior leadership) dictating how AI should be used via officially sanctioned apps only."

Recommendations for IT and Security Teams

Start with Visibility

You can't manage what you can't see, so start by investing in tools and processes that provide visibility into AI tool usage across your organization. This might include DLP solutions that can detect AI-related traffic, browser monitoring tools, or even simple surveys to understand current usage patterns.
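As a rough illustration of what a first visibility pass could look like, the sketch below counts distinct users per AI tool from a web proxy or DNS log export. It is a minimal example under stated assumptions, not a description of any particular product: the CSV layout (`timestamp`, `user`, `host` columns), the sample rows, and the function names are hypothetical, and the domain list is a short illustrative starting point that you would need to maintain yourself.

```python
import csv
import io

# Illustrative hostname-to-tool mapping (an assumption; maintain your own list).
AI_TOOL_DOMAINS = {
    "chatgpt.com": "ChatGPT",
    "gemini.google.com": "Google Gemini",
    "copilot.microsoft.com": "Microsoft Copilot",
    "claude.ai": "Claude",
}


def match_tool(host):
    """Return the tool name if host equals, or is a subdomain of, a listed domain."""
    host = host.strip().lower().rstrip(".")
    for domain, tool in AI_TOOL_DOMAINS.items():
        if host == domain or host.endswith("." + domain):
            return tool
    return None


def summarize_ai_usage(log_file):
    """Count distinct users per AI tool from a CSV log with 'user' and 'host' columns."""
    users_by_tool = {}
    for row in csv.DictReader(log_file):
        tool = match_tool(row["host"])
        if tool:
            users_by_tool.setdefault(tool, set()).add(row["user"])
    return {tool: len(users) for tool, users in users_by_tool.items()}


# Hypothetical sample data standing in for a real log export.
SAMPLE_LOG = """timestamp,user,host
2025-06-02T09:00:00Z,alice,chatgpt.com
2025-06-02T09:05:00Z,bob,claude.ai
2025-06-02T09:07:00Z,alice,claude.ai
2025-06-02T09:10:00Z,carol,example.com
2025-06-02T09:12:00Z,alice,chatgpt.com
"""

if __name__ == "__main__":
    print(summarize_ai_usage(io.StringIO(SAMPLE_LOG)))
```

Counting distinct users rather than raw requests keeps one heavy user from skewing the picture. A log-based pass like this only sees traffic that crosses the monitored network, so it complements surveys and browser-level tooling rather than replacing them.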

Develop Comprehensive Policies But Make Them Practical

While 71% of organizations have AI policies, the gap between policy existence and policy effectiveness is clear. Focus on creating policies that are:

- Specific about approved tools and use cases

- Clear about what data can and cannot be shared

- Regularly updated as the landscape evolves

- Backed by technical controls where possible
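One way to make a policy specific and easier to back with technical controls is to keep it as structured data instead of prose alone. The sketch below is a hypothetical illustration: the `ToolPolicy` type, the data classifications (`public`, `internal`), and the tool-to-data assignments are assumptions made for the example, not survey findings or recommendations.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ToolPolicy:
    name: str
    allowed_data: frozenset  # data classifications that may be shared with this tool
    use_cases: tuple = ()    # approved use cases, for documentation purposes


# Hypothetical policy entries; the assignments are illustrative only.
POLICY = {
    "ChatGPT": ToolPolicy(
        "ChatGPT", frozenset({"public"}), ("drafting", "brainstorming")
    ),
    "Microsoft Copilot": ToolPolicy(
        "Microsoft Copilot", frozenset({"public", "internal"}), ("scripting",)
    ),
}


def is_allowed(tool, data_class):
    """Return (allowed, reason) for sharing data of data_class with tool."""
    policy = POLICY.get(tool)
    if policy is None:
        return False, f"{tool} is not an approved tool"
    if data_class not in policy.allowed_data:
        return False, f"{data_class} data may not be shared with {tool}"
    return True, "allowed"


if __name__ == "__main__":
    print(is_allowed("ChatGPT", "public"))
    print(is_allowed("ChatGPT", "internal"))
    print(is_allowed("Notion AI", "public"))
```

Because the same structure can feed an internal wiki page, a training quiz, or an allowlist export, updating the policy in one place keeps the written guidance and the technical controls from drifting apart.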

Invest in Education, Not Just Restriction

The most successful organizations appear to be those that combine clear boundaries with comprehensive education. Help employees understand not just what they can't do, but why certain restrictions exist and how to use AI tools effectively within those boundaries.

Plan for the Long Term

As one respondent noted: "Generally speaking, AI is a fad and most of it will pass in time. What remains will become part of our regularly managed tools." We’re not quite sure about that. While AI hype may fade, AI capabilities will become embedded in the tools we already use. Plan governance frameworks that can adapt as AI becomes less of a separate category and more of a feature.

Looking Ahead

The AI governance challenge isn't going away - it's evolving. As AI capabilities become embedded in existing enterprise tools (Microsoft 365, Google Workspace, Salesforce, etc.), the challenge will shift from managing standalone AI tools to governing AI features within trusted platforms.

Organizations that establish strong governance frameworks now, while AI tools are still largely external and identifiable, will be better positioned to maintain control as AI becomes more pervasive and integrated into their existing technology stack.

The survey results make one thing clear: AI in the workplace is no longer a question of "if" but "how." The organizations that figure out the "how" part, balancing innovation with security, enabling productivity while protecting data, will be the ones that thrive in an AI future.

This report is based on a survey of IT and security professionals conducted by Kandji in June 2025.